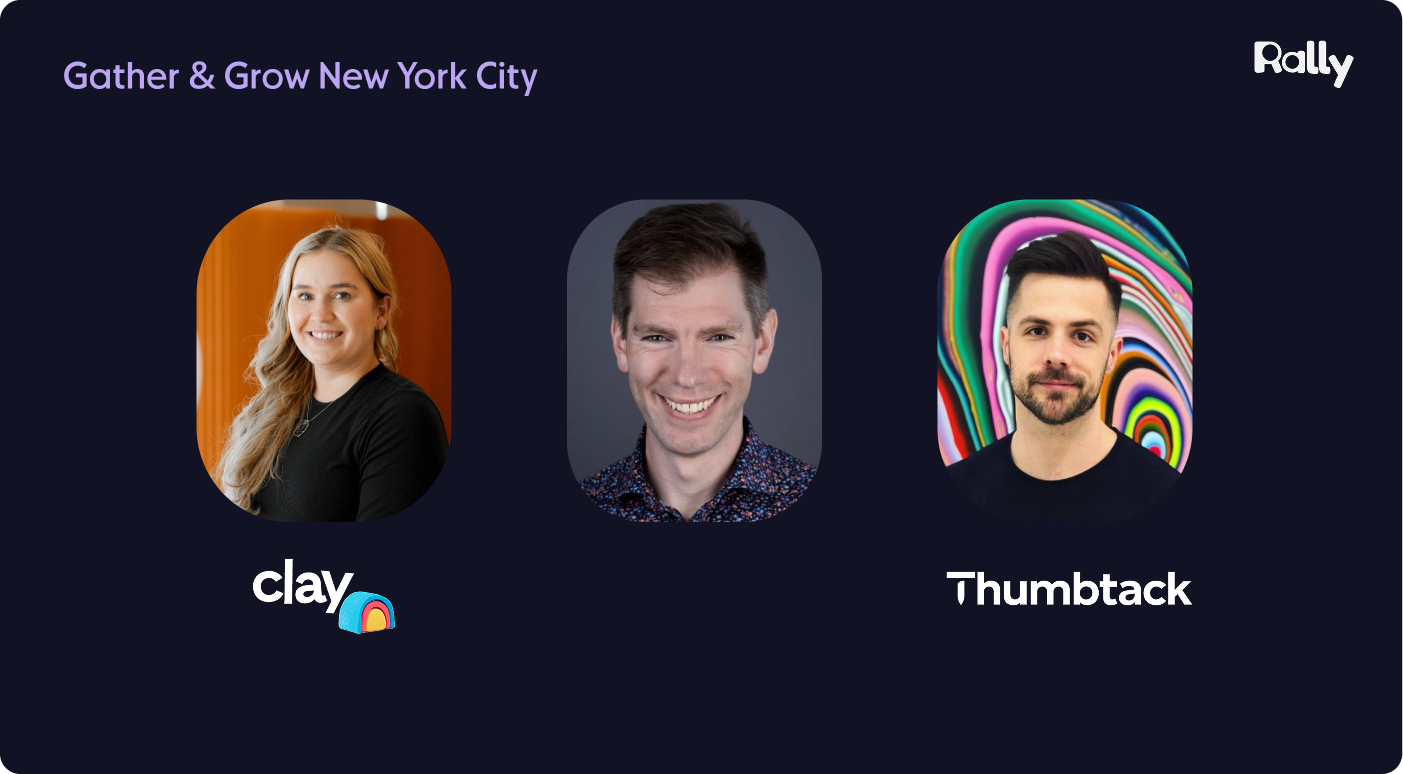

Myths & hot takes: AI in Research – Researchers from Clay, Thumbtack, & Webflow bust the biggest myths about AI

AI is changing research faster than anyone expected. New tools promise efficiency, stakeholders want answers yesterday, and suddenly every team is trying to figure out what it means to “do research” in an AI world. For some, it’s exciting. For others, it’s a little terrifying.

Gather & Growth 2025 turned up the heat with a live debate on AI in research: what’s hype, what’s helpful, and what’s actually changing how research gets done.

👋 Jamie Crabb, UX Research Lead at Clay (formerly of Notion, Meta, and Patreon), has spent her career helping teams scale insights responsibly. Recently, she’s been exploring how AI can help non-researchers learn to think – and act – like researchers.

👋 Brad Orego, independent research leader and former Head of Research at Webflow and Auth0, brings a data-driven, product-minded lens to the conversation. He’s known for using AI to make research more strategic, integrated, and impossible to ignore.

👋 Sebastian Fite, Senior UX Researcher at Thumbtack (previously Instagram and Facebook), blends ethnography with design thinking to uncover how people connect, create, and buy. He’s especially interested in where human intuition and AI automation meet.

If you weren’t able to join us live, or just want a refresh on the insights shared, keep reading or watch the recording.


Is AI too early to use right now?

Jamie: I don’t think AI is too early to use right now. Other people in our industry, other researchers and practitioners in data spaces, are already using these tools, and it’s going to become an expectation that we use them too.

The important thing is understanding the boundaries you want to set for how you’re using it in your own practice, and what makes sense for your company and culture. It’s going to be happening somewhere in your organization, whether or not you’re involved, so it’s really important to define how it interacts with your data.

That’s the part that scares me: people putting my studies into their own GPTs without me knowing.

Brad: If you’re not using AI right now for basic tasks like note-taking or transcription, you’re holding yourself back. AI is already effective for many tasks.

It’s also on us to familiarize ourselves with the tools as they come out. That includes the big ones like Gemini and ChatGPT, but there are many smaller, niche tools too, such as AI-assisted coding platforms that help us prototype or automate tedious steps.

From a professional development standpoint, we should all be trying these tools, learning where they’re effective and where they’re not.

Sebastian: Most people have tried AI at some point, and their first experience probably wasn’t great. You think it’s going to solve all your problems, then you try a prompt and it doesn’t work.

It’s a brand-new tool, and we’re all collectively figuring out how to use it, not just in research but across every industry. So give it another shot. The models are advancing quickly, and we’re all learning how to prompt and set up context to get better output.

“It’s a brand-new tool, and we’re all collectively figuring out how to use it, not just in research but across every industry. So give it another shot.”

– Sebastian Fite, Thumbtack

Try different models for different use cases. I’m a heavy Gemini user right now, and 2.5 Flash versus 2.5 Pro are completely different experiences. Getting to the output you actually want takes experimentation.

Be very clear about what you want before you start prompting. Define the output first. If you don’t know what you’re trying to get, it’s almost impossible to write the right input.

Will AI replace researchers?

Brad: We’ve heard this fear before with research democratization. People said the same thing, that democratization would take our jobs. My take is, if democratization or AI can take your job, you probably weren’t doing a valuable job to begin with.

AI is forcing us to rethink what value we actually provide. Is it writing research plans, gathering data, doing analysis and synthesis, or building slide decks? Probably not. The real value that research provides is understanding the business, de-risking decisions, uncovering opportunities, and helping the company make better decisions.

“AI is forcing us to rethink what value we actually provide. The real value that research provides is understanding the business, de-risking decisions, uncovering opportunities, and helping the company make better decisions.”

– Brad Orego, ex-Webflow

If you step back from the tactical things that got you to this point in your career and focus on those bigger outcomes, AI won’t replace you. In fact, it can help you do more of what’s valuable because it takes repetitive work off your plate. Things like moderating sessions, transcribing, or spending hours watching recordings are all time sinks that AI can handle. That lets us focus on the work that matters.

Jamie: I definitely don’t think AI will replace me. But I do think it creates some challenges for newer or junior researchers, especially people shifting into research from other roles.

Right now, early-career researchers are being pushed straight into deeper strategy work. They’re expected to be more strategic much earlier because they’re no longer doing the groundwork tasks that used to build those skills, like tagging, transcription, or running brainstorms. Those things can now be handled by AI.

That work is still meaningful, especially when you’re learning, because it helps you understand how insights come together. So I think early-career researchers need to be selective. Sometimes you should intentionally do the manual work – listen back to your interviews, transcribe them yourself, pull quotes – just to strengthen your own understanding. It helps you become better informed about what you’re asking AI to do.

We also need to provide mentorship and protection for junior researchers so they can build those foundational skills before relying too heavily on AI.

Sebastian: If we take something like a typical user interview, there’s so much happening that AI simply can’t capture right now. The context and environment that someone is in, their emotional state, the differences between what they say and what they do – those are all things researchers interpret in the moment. Current models can’t do that.

“AI is a supporting tool, not a replacement. And it’s not just happening in research. Every field is figuring out how to use these tools to extend, not replace, human judgment.”

– Sebastian Fite, Thumbtack

I’ll also say this worry about AI replacing people isn’t unique to research. For example, I became, briefly and unfortunately, the face of “vibe coding” in my organization. After a brainstorm, I used an AI-assisted coding tool to create an interactive prototype. It spread internally because it looked cool and fast. But I would never claim that it was production-ready or that I suddenly became a designer or engineer. It was simply a way to communicate ideas better.

That’s how I see AI in our field too. It’s a supporting tool, not a replacement. And it’s not just happening in research. Every field is figuring out how to use these tools to extend, not replace, human judgment.

I cannot use AI at work because of company “reasons.” What should I do?

Sebastian: “Reasons” is not really a sufficient reason. From a professional development standpoint, you are competing with everyone else who is learning these tools, so it is important to know what works, what does not, and what the best practices are.

You still need to engage with your data directly, look through your transcripts, develop your own coding schemes, and understand what is happening yourself. When I first started using AI tools, I noticed they often pushed toward the end deliverable. But the real value is in the intermediate steps: generating research questions, writing screeners, and engaging with your data before you let AI summarize it. That is how you build better intuition and keep your skills sharp.

Jamie: I have heard this same concern internally, both at my current company and when I worked at Notion, where we were dogfooding every AI tool while building our own AI product. The two reasons I hear most often are: one, “it is cheating,” and two, “it hallucinates.”

The first one, feeling like you are cheating, comes from the idea that you are discrediting your own training or expertise. I understand that. But we already have rules and structures for how we work with data, check our biases, and turn raw data into claims. Those standards still apply.

My counterpoint is that AI can actually help you check your own biases. I have used it that way many times. For example, I will put my report into a tool like Marvin that has access to the raw data and ask it, “Does this claim actually hold up?” Sometimes it tells me no, that I am referencing an insight from a past study or misinterpreting something. That has been really valuable.

“AI hallucination is real. My temporary solve is to be very intentional about which models I use and what data I share with each. I choose tools strategically.”

– Jamie Crabb, Clay

The second reason, hallucination, is real. My temporary solve is to be very intentional about which models I use and what data I share with each. I choose tools strategically. For example, raw transcripts only go into one tool that is dedicated to analysis. Another tool, like Claude, knows how I write and how I like to communicate, so I use that for polishing or presenting insights. I am deliberate about what information each model gets, and I think that is key to reducing risk.

Brad: Fear is often the biggest reason people say they cannot use AI. It is a convenient excuse. It is okay to be nervous about a new technology, but you can start small and use it safely.

If your company does not have approved tools yet, talk to leadership about finding ways to experiment securely. You can use AI to generate things like survey or interview questions without sharing any private data about your business or users. There are safe use cases in every organization.

“If you are not using AI at work, use it in your personal life. Plan a vacation or outline a project, anything that gives you more exposure. Every time you use it, you get better at prompting and understanding how it works.”

– Brad Orego, ex-Webflow

And even if you are not using it at work, use it in your personal life. Plan a vacation or outline a project, anything that gives you more exposure. Every time you use it, you get better at prompting and understanding how it works.

You can even ask the model to help you write a better prompt, for example, “Help me phrase this for ChatGPT or Gemini.” These tools do that really well now.

Things change quickly. Anthropic recently started recommending XML tags instead of Markdown to structure prompts. That came out just last week. It is always evolving. The point is to keep using it, keep practicing, and build that intuition.
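To show what structuring a prompt with XML-style tags can look like, here is a minimal Python sketch. The tag names (`instructions`, `output_format`, `transcript`) are arbitrary choices for illustration, not a schema any model requires, and the function only assembles a string; it does not call a model API. Spelling out the output format as its own section echoes Sebastian's advice to define the output before you start prompting.

```python
from xml.sax.saxutils import escape


def build_tagged_prompt(sections: dict[str, str]) -> str:
    """Wrap each prompt section in an XML-style tag, e.g. <instructions>...</instructions>.

    Tag names are illustrative only; no particular schema is required by the models.
    """
    parts = []
    for tag, body in sections.items():
        # Escape &, <, > so pasted data cannot accidentally open or close a tag.
        parts.append(f"<{tag}>\n{escape(body.strip())}\n</{tag}>")
    return "\n\n".join(parts)


prompt = build_tagged_prompt({
    "instructions": "Summarize the main pain points in the transcript.",
    "output_format": "A bulleted list of at most five pain points, each with one supporting quote.",
    "transcript": "P1: I never know if the pro will show up on time...",
})
print(prompt)
```

Separating instructions from pasted data this way makes it harder for a model to confuse a participant's words with your directions.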

Is AI only helpful for qualitative research?

Brad: I think AI might actually be better at quantitative research than qualitative research. We have decades of experience as humans interpreting nuance, tone, and body language. AI does not have that. Qualitative research is messy. Quantitative research is numbers, and AI is very good with numbers.

Instead of spending hours building spreadsheets, formulas, and pivot tables, you can give your survey data to an AI model and ask it questions. It can summarize the results, visualize patterns, and even generate graphs. You still need to check for hallucinations, but it can save you a lot of time.

You can also have it write SQL queries for you, or use it to check your math before you go to a data scientist. I am not saying you do not need data scientists, but you no longer have to bother them with every small, low-level request. If you limit AI only to qualitative work, you are missing out on what it can do for quantitative analysis.
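One way to "check your math" on an AI-written query is to run it against a tiny, hand-made dataset whose answer you can verify by eye before pointing it at real data. A minimal Python sketch using the standard-library `sqlite3` module; the `responses` table, its columns, and the rows are hypothetical.

```python
import sqlite3

# Hypothetical survey table: one row per respondent, satisfaction on a 1 to 5 scale.
rows = [
    ("r1", "pro", 5),
    ("r2", "pro", 3),
    ("r3", "customer", 4),
    ("r4", "customer", 2),
    ("r5", "customer", 4),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE responses (respondent_id TEXT, segment TEXT, satisfaction INTEGER)")
conn.executemany("INSERT INTO responses VALUES (?, ?, ?)", rows)

# The kind of query you might ask a model to write: per-segment count, mean score,
# and top-two-box share (respondents scoring 4 or 5).
query = """
SELECT segment,
       COUNT(*) AS n,
       AVG(satisfaction) AS avg_score,
       AVG(CASE WHEN satisfaction >= 4 THEN 1.0 ELSE 0.0 END) AS top2_share
FROM responses
GROUP BY segment
ORDER BY segment
"""
results = conn.execute(query).fetchall()
for segment, n, avg_score, top2_share in results:
    print(segment, n, round(avg_score, 2), round(top2_share, 2))
conn.close()
```

Because the expected numbers are easy to work out by hand here (customers: 3 respondents, mean of about 3.33, two of three scoring 4 or higher), a wrong `GROUP BY` or filter shows up immediately.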

Jamie: I agree. Computers are math, and AI naturally fits into quantitative workflows. But I also think there is a bias behind the idea that AI is better for qualitative research. Sometimes we feel more comfortable relying on numbers because they seem objective. There is a sense of confidence that comes with saying, “I did the math, so I know it is right.”

“I do not think AI is the right tool for qualitative work because there is such a human element involved. Building rapport, reading emotion, interpreting nuance – those things cannot be automated.”

– Jamie Crabb, Clay

When I think about people assuming AI is better for qualitative research, I wonder if part of that comes from already undervaluing qualitative research. I do not think AI is the right tool for qualitative work because there is such a human element involved. Building rapport, reading emotion, interpreting nuance – those things cannot be automated.

That said, I do use AI in quantitative ways. For example, my data scientist might send me a query, or I might write a very rough SQL script myself. Before I send it back or ask for help, I paste it into a model and say, “What does this do?” If the explanation makes sense, I know I am on the right track. It is a great way to build confidence and catch small mistakes.

Sebastian: I am primarily a qualitative researcher, but I am sometimes asked to run surveys. I have a limited working knowledge of R, which has been on my list to learn for years. AI helps bridge that gap. I can generate a script, review it, and make small edits rather than waiting on someone else to write it for me.

“AI helps me plan studies, refine questionnaires, and draft research plans. I see opportunities for it to plug into every stage of the process, not just one method.”

– Sebastian Fite, Thumbtack

It is useful for more than just analysis. AI helps me plan studies, refine questionnaires, and draft research plans. I see opportunities for it to plug into every stage of the process, not just one method.

If AI makes research easier, will it water down the craft?

Sebastian: This concern comes up anytime a new tool enters the research process, especially when people outside the research team start using it. At Thumbtack, we have a process where designers can lead research with us in the loop. I often find myself consulting more than I expected to.

If AI helps those teammates get to a first draft of a strong questionnaire or study plan, that makes my job easier. It helps me help them. I see that as a benefit, not a threat. Democratization is not as scary as it sounds.

Jamie: I am currently the only researcher at my company, and I have not yet built out a team. Most of the research is done by PMs, PMMs, and designers. We are definitely democratizing research, and AI plays a big role in that.

“To me, research quality is not about whether AI was involved. It is about process, relationship-building, and principles.”

– Jamie Crabb, Clay

Used properly and within guardrails, AI can make the process faster and more consistent. To me, research quality is not about whether AI was involved. It is about process, relationship-building, and principles. It is about keeping things ethical and compliant.

There is always a risk with new tools, which is why we set clear standards for when and how to use them. But if AI helps me create a slightly less biased survey or speeds up my analysis, that is a good thing. As researchers, we already know where the quality bar is. Using AI to extend that reach can actually be a superpower.

Brad: I think AI can actually strengthen the craft of research. When I was at Webflow, we built a research planning assistant so PMs and designers could start their projects with structure. Instead of coming to a researcher cold and saying, “I want to do a study on this,” they first went to the bot.

We trained it on dozens of our best research plans and spent months fine-tuning the prompts. The output was surprisingly strong. It gave people a solid starting point that followed our standards and templates. That freed up the research team to focus on higher-level guidance instead of rewriting the basics every time.

I can imagine a future where there is a co-pilot in the research session itself. It could flag when you ask a leading question or remind you about a topic you forgot. We are not there yet, but I think AI will eventually make it easier to maintain quality at scale.

People are going to run research on their own whether we like it or not. If AI can be the guardrail that keeps that work consistent, that is a win.

What are your favorite AI tips or tricks for researchers?

Brad: Use AI to critique your work. I often throw my entire research plan or summary into a model and ask it to tell me what is wrong with it. Even if the feedback is not perfect, it helps me see things from a different perspective.

“Use AI to critique your work. Even if the feedback is not perfect, it helps me see things from a different perspective.”

– Brad Orego, ex-Webflow

Experiment with different models and temperature settings. Temperature controls how varied or predictable the model’s output is: lower values give more consistent answers, higher values more creative ones, and the allowed range depends on the provider (commonly 0 to 1 or 0 to 2). You can also assign roles or perspectives, like “you are the VP of Product” or “you are the CMO,” and ask it to give feedback from that point of view. It is a great way to uncover blind spots or new ways of thinking.

Even if you do not use any of the suggestions, it helps you reflect and sharpen your reasoning.
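For readers curious what the temperature setting does mechanically, here is a minimal Python sketch of the standard temperature-scaled softmax: a model's raw scores (logits) for candidate next tokens are divided by the temperature before being turned into probabilities. The logits are made-up numbers; real models do this over a very large vocabulary, and providers may layer other sampling controls (such as top-p) on top.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw token scores into probabilities, scaled by temperature.

    A small temperature exaggerates the gaps between scores (more predictable output);
    a large temperature flattens them (more varied output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive (0 is the limit of always picking the top token)")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate next words
for t in (0.2, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

At 0.2 almost all of the probability lands on the top-scoring word; at 2.0 the alternatives get a real share, which is why higher settings read as more "creative."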

Jamie: When I first started using AI for research tasks, I was frustrated. I thought I had to get really good at writing perfect prompts to make it work. Now the models are advanced enough that I do not have to structure things as much to get useful results.

My favorite trick is to brain dump. I open voice input on my phone and talk for ten minutes as if I am presenting my findings to a teammate. Then I feed that transcript into an AI model and have it summarize what I said. It helps me process what I think I heard, identify key insights, and turn unstructured thoughts into something usable. It is a great way to debrief yourself after a project.

“I have a collection of AI personas that represent different stakeholders with different motivations. I run my research summaries or recommendations through them and let them argue with me until I can defend my point of view.”

– Sebastian Fite, Thumbtack

Sebastian: I use what I call “adversarial gyms.” I have a collection of AI personas that represent different stakeholders with different motivations. I run my research summaries or recommendations through them and let them argue with me until I can defend my point of view.

A big part of our job as researchers is persuasion, helping people make the right decisions for users. This kind of role play prepares me for those conversations.

Another trick is to experiment with synthetic users. If you work on a two-sided or multi-sided marketplace, you rarely get to observe both sides interacting in real time. You can simulate that by using synthetic participants as part of a research session. You can connect models through an API and create a realistic conversation between two user types. It is not a replacement for real participants, but it helps test concepts and communication flows early.
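A minimal Python sketch of the turn-taking loop behind this kind of setup, assuming each side is simply a function that receives the conversation so far and returns its next message. The speaker functions (`synthetic_pro`, `synthetic_customer`) are hypothetical stubs with canned replies so the example runs offline; in a real setup each would call a model API with its own persona prompt. The resulting transcript is a test fixture for concepts and flows, not research data.

```python
from typing import Callable

# A "speaker" takes the transcript so far (a list of (name, message) pairs)
# and returns its next message.
Speaker = Callable[[list[tuple[str, str]]], str]


def run_dialogue(speakers: dict[str, Speaker], opener: str, turns: int) -> list[tuple[str, str]]:
    """Alternate between exactly two speakers for a fixed number of turns.

    The first speaker opens with `opener`; every later turn goes to the other
    speaker, who is given the full transcript so far.
    """
    names = list(speakers)
    if len(names) != 2:
        raise ValueError("expected exactly two speakers")
    transcript = [(names[0], opener)]
    for turn in range(1, turns):
        name = names[turn % 2]
        transcript.append((name, speakers[name](transcript)))
    return transcript


# Hypothetical stubs with canned replies so the sketch runs offline.
# A real version would send `transcript` plus a persona prompt to a model API.
def synthetic_pro(transcript: list[tuple[str, str]]) -> str:
    return "I can come Thursday afternoon. Parts would be extra."


def synthetic_customer(transcript: list[tuple[str, str]]) -> str:
    return "Thursday works. Roughly how much should I budget?"


log = run_dialogue(
    {"customer": synthetic_customer, "pro": synthetic_pro},
    opener="Hi, can you fix a leaking sink this week?",
    turns=4,
)
for speaker, message in log:
    print(f"{speaker}: {message}")
```

Replacing one stub with a function that reads a real participant's message would let a human talk to a synthetic counterpart instead of two models talking to each other.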

How can AI be used collaboratively within research teams?

Sebastian: This might be a broad definition of “collaboration,” but tools like NotebookLM have been very effective for me. I use it to create a focused research database or repository around a specific topic. That allows other researchers or stakeholders to self-serve when they have a question like, “What do we know about this?” It is especially helpful when someone new joins a project or wants quick access to past insights.

Jamie: I promise I am not on the Marvin sales team, but having all our research in one repository that AI can access with context has been really powerful. My non-research teammates also add their data, so everything lives in one place. That makes it easier for AI to find connections across studies and surface insights faster.

If I am working on something that I did not personally write or analyze, I often turn to NotebookLM because I trust it the most for structured, source-based answers. It is great for helping others explore the research library safely without losing context.

“When you have a team of researchers, you already have that human feedback loop. AI just helps free up time so those discussions can happen sooner because you are not stuck transcribing or doing manual cleanup.”

– Brad Orego, ex-Webflow

Brad: I do not think AI will fundamentally change how we collaborate with other researchers. Collaboration still starts with having a clear idea of what you are trying to learn, why you are doing the research, and what the goals are.

AI can help you build or critique a research plan, or it can assist with analysis, but it is more useful when you are working independently. When you have a team of researchers, you already have that human feedback loop. AI just helps free up time so those discussions can happen sooner because you are not stuck transcribing or doing manual cleanup.

How do you balance letting stakeholders use AI tools for insights versus keeping research quality high?

Brad: The most important thing to remember is that if something goes wrong with an AI output, the AI will not be held responsible. You will. That is why you have to be very aware of what is being produced and how it is used.

Give stakeholders access to the tools, but set clear expectations. Tell them they can use AI to explore data and generate insights, but before they take action, they should run their output by a researcher. Most of the time it will be fine, but sometimes you will catch something that is off.

I recommend letting them use AI for first drafts, summaries, or exploratory questions, then having a researcher review those results before they are shared or used to make decisions. That approach encourages learning while keeping quality control in place.

Jamie: The responsibility for deep, meaningful research still sits with the research team. I use a framework that looks at urgency and risk. Not every question a team asks is high risk.

If a request is low risk and based on something we already know well, I am comfortable letting a stakeholder use AI to pull an answer or summarize insights. But if the question involves something new, sensitive, or high impact, that is when I want them to flag me in. Those are the moments where human review is critical.

Giving teams freedom on the low-risk, high-speed questions helps them move faster, but it also makes it clear when to bring a researcher in for oversight.
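Jamie's rule of thumb can be written down as a simple decision function, which may help a team make the handoff explicit. This is a hypothetical Python sketch: the field names and conditions are one interpretation of her description (anything new, sensitive, or high impact goes to a researcher), and it leaves urgency out because the panel does not say how urgency changes the routing.

```python
from dataclasses import dataclass


@dataclass
class Request:
    question: str
    already_well_understood: bool  # answered by existing studies?
    sensitive: bool                # e.g. privacy, legal, or vulnerable users
    high_impact: bool              # could drive a major product or business decision


def route(req: Request) -> str:
    """Hypothetical triage: self-serve with AI only when nothing raises the risk."""
    if req.sensitive or req.high_impact or not req.already_well_understood:
        return "flag a researcher"
    return "self-serve with AI"


print(route(Request("What do users say about onboarding?", True, False, False)))  # -> self-serve with AI
print(route(Request("Should we change our pricing model?", True, False, True)))   # -> flag a researcher
```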

“The key is keeping a ‘researcher in the loop’ mindset. Stakeholders can and should use AI to explore data and surface ideas, but there always needs to be a researcher validating the final output.”

– Sebastian Fite, Thumbtack

Sebastian: The key is keeping a “researcher in the loop” mindset. Stakeholders can and should use AI to explore data and surface ideas, but there always needs to be a researcher validating the final output. That is how you get the best of both worlds: speed from the tools and rigor from the people.

When is it safe to use synthetic participants, and when should we rely on real ones?

Sebastian: When I mentioned synthetic participants earlier, I was talking about creating more realism between two parties in a study. For example, if you are running research in a two-sided marketplace, you can use a synthetic participant to simulate the other side of the interaction so a real participant has someone to engage with. It is not meant to replace real people; it is just a way to make the experience more natural and dynamic.

Jamie: I have yet to find a case where I trust synthetic participants enough to use them as part of real research. I have seen some interesting academic examples, especially in cases where using real participants might not be safe or feasible, but for product research, I do not find them reliable.

“The value of User Research comes from real people whose lives, behaviors, and environments are always changing. That constant change is what gives the work meaning.”

– Jamie Crabb, Clay

It is much easier to train a model on myself – on my understanding, my domain knowledge, my tone – than it is to train one on a thousand fake users. Even if you could, the value of User Research comes from real people whose lives, behaviors, and environments are always changing. That constant change is what gives the work meaning.

If I had to choose, I would use a platform like Mechanical Turk first, even with all its limitations, before I would trust synthetic participants.

Brad: The UXR Guild did a great talk on synthetic users that I recommend watching. They made a really good point about using synthetic participants to stress test your research, not to replace it. You can use them to pilot a study, test your questions, or preview what a dataset might look like, but not as actual participants.

If you simulate a thousand responses, that can help you catch errors or weird patterns in your study design before you launch it. But that data should never be used as the real insight source.

Anytime you train a model, you flatten the peaks and valleys in human behavior, and those extremes are usually what researchers care about most. We learn from the edge cases, the surprises, the contradictions. Synthetic users remove that.

“I do not think synthetic participants can replace humans. There may be small uses in the research process, but the core of User Research still requires real people.”

– Brad Orego, ex-Webflow

For that reason, I do not think synthetic participants can replace humans. There may be small uses in the research process, but the core of User Research still requires real people.
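Brad's idea of simulating a thousand responses to stress-test a study does not need an LLM for the mechanical part. A minimal Python sketch, assuming a survey with simple forward skip logic: random simulated respondents walk the survey, and the script reports which questions are never reached and which skip rules lead to a dead end. The survey definition, including the deliberately broken skip to `q5`, is hypothetical, and as Brad says, simulated answers are for catching design errors, never for insights.

```python
import random
from collections import Counter

# Hypothetical survey: each question lists its options and a skip rule mapping an
# answer to the id of the next question (otherwise respondents go to the next in order).
SURVEY = [
    {"id": "q1", "options": ["yes", "no"], "skip": {"no": "q4"}},
    {"id": "q2", "options": ["weekly", "monthly", "rarely"], "skip": {}},
    {"id": "q3", "options": ["1", "2", "3", "4", "5"], "skip": {"1": "q5"}},  # bug: "q5" does not exist
    {"id": "q4", "options": ["a", "b"], "skip": {}},
]


def simulate(survey, n_respondents=1000, seed=0):
    """Walk random respondents through the survey; count reached questions and broken skips."""
    rng = random.Random(seed)
    index = {q["id"]: i for i, q in enumerate(survey)}
    reached, broken = Counter(), Counter()
    for _ in range(n_respondents):
        i = 0
        while i < len(survey):
            q = survey[i]
            reached[q["id"]] += 1
            target = q["skip"].get(rng.choice(q["options"]))
            if target is None:
                i += 1
            elif target in index and index[target] > i:
                i = index[target]  # valid forward skip
            else:
                broken[(q["id"], target)] += 1  # missing or backward target: dead end
                break
    return reached, broken


reached, broken = simulate(SURVEY)
never_reached = [q["id"] for q in SURVEY if reached[q["id"]] == 0]
print("reached:", dict(reached))
print("never reached:", never_reached)
print("broken skips:", dict(broken))
```

With these random answers, roughly one in ten simulated respondents hits the broken `q3` skip, so the error surfaces before any real participant sees the survey.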

Any final thoughts on how AI will shape the future of research?

Brad: The researchers who thrive will be the ones who experiment, stay curious, and learn how to use these tools to focus on strategy, storytelling, and influence.

If AI can take away repetitive tasks like transcription, note-taking, or formatting reports, that frees up time to think more deeply and contribute more strategically. It lets us spend more time connecting dots and guiding product direction.

Jamie: I think it is less about being afraid that AI will take your job and more about learning how to partner with it. The researchers who know how to use AI to make their work faster and sharper will become even more valuable.

Let AI handle the mechanical tasks so you can spend your time on the things only humans can do, like judgment, prioritization, and empathy. The skill to develop now is knowing when to lean on AI and when to trust your own intuition.

“The more we treat AI as a collaborator rather than a replacement, the better the outcomes will be. AI can help us move faster, but it cannot replace that human perspective.”

– Sebastian Fite, Thumbtack

Sebastian: The more we treat AI as a collaborator rather than a replacement, the better the outcomes will be. AI is great at producing structure and efficiency, but it is not good at understanding people or context.

Our value as researchers comes from interpreting nuance, empathizing with users, and understanding what motivates human behavior. AI can help us move faster, but it cannot replace that human perspective. The future of research will be a partnership between the two.

🔥 Huge shout-out to Jamie, Brad, and Sebastian for keeping it real about AI’s role in research.

The future of research isn’t man versus machine; it belongs to the people who know how to make the two work together.